Blog | December 9, 2022

Enabling useful quantum advantage with near-term quantum computers

Quantum computers are here to stay. In ten years from now, they will be widely adopted by many industries, as was the case for transistors and lasers invented during the first quantum revolution. But before any far-flung application of the technology can take place, the industry has yet to demonstrate its practical usefulness.

Recently, IBM announced a 433 qubit quantum computer — by far the largest existing on the market. At the same time, the company has reported outstanding improvements on the performance of the processors. While this is an unprecedented advancement in the technology, the computational power of the device remains difficult to harness, due to its susceptibility to errors. Indeed, most of the disruptive quantum algorithms, such as Grover search or Shor’s algorithm, rely on fault tolerance. They cannot be implemented successfully unless the underlying hardware is practically error-free. To reach such a high level of computational accuracy, error-correction algorithms leveraging massive redundancy must be used. Such algorithms achieve practically error-free computing with imperfect devices but require millions of qubits with operational error rates orders of magnitude smaller than current¹ — far beyond the hardware capabilities in the near term.

So there is a long way to go before Quantum Processing Units (QPUs) will reach fault-tolerance for sizes necessary to tackle any relevant computational problems. But do we have to wait until the distant fault-tolerance to reap the reward? Couldn’t we find use for the still evolving devices and strive for use cases and business impact already in the near term?

Roadblocks of near-term quantum computing

In the past ten years a lot of effort has been dedicated into developing algorithms for noisy near-term quantum processors. These efforts culminated a few years back in the demonstration of quantum advantage, in which a quantum processor outperformed best classical computers at a non-trivial (but useless) task². The demonstration later led to the development of an efficient classical method competitive with the performance of the quantum hardware, opening again the race for establishing quantum advantage³.

Moving beyond showing quantum advantage, demonstration of any useful quantum advantage is certainly still missing, even if potential algorithms to this end have been suggested. For example, the variational quantum eigensolver (VQE) or the quantum approximate optimization algorithm (QAOA) are able to use small, noisy devices by reducing the quantum computational load with a hybrid scheme implementing some parts of the algorithm in usual classical processors.

The difficulty, however, lies in scaling such approaches to relevant problem sizes. Existing algorithms work well for very few qubits (<10) but struggle for increasingly larger systems. For VQE, the flagship algorithm for quantum chemistry calculations, this means limiting the application to extremely small molecules easily computable with existing classical methods. If such algorithms are to be useful in the context of e.g. drug development, the scaling to larger qubit numbers would be required.

Indeed, the use of near-term quantum computers for any relevant problem requires solving three roadblocks:

1. High number of measurements needed to determine relevant properties

The reason quantum computers are so powerful is their capability to be in many configurations at the same time. But when we measure to read out the answer of a calculation, we only get one of the possible configurations, randomly. As a consequence, the number of measurements we need to make on the quantum hardware also grows with the number of possible configurations making any computation unacceptably long — a huge bottleneck, especially when quantum hardware access is limited and expensive.

If we want to use near term quantum computers for solving useful quantum chemistry problems requiring some hundreds of qubits, the number of measurement needs to be reduced drastically.

2. Long gate sequences needed to prepare a state on a quantum computer

Algorithms such as VQE, designed for near term quantum computing, are based on a classical subroutine, where a given predefined sequence of operations on the quantum hardware, called gates, is optimised. The specific selection of this sequence influences greatly the performance of the algorithm. Too long sequences are not feasible on near-term quantum hardware, but provide accurate enough estimations of the target quantities to be computed. On the other hand, with too few gates the target quantities cannot be estimated to precisions necessary, for instance, in quantum chemistry simulations. Such predefined sequences require to always compromise between accuracy and gate numbers.

Recently an alternative method for preparing states on a quantum computer was suggested. In this approach, called Adapt-VQE⁴, instead of a predefined sequence, gates are added one by one. Such an approach is able to find very accurate estimations with very short gate sequences. But the approach has a serious downside: in order to decide the gates to be added at each step a large amount of extra measurements are necessary, making the protocol impossible in practice and bringing it back to roadblock number one.

3. High noise mitigation overhead

Errors are unavoidable in any quantum computer. The quantum processor should act as prescribed by the gate sequence that is executed, but in reality, due to various small disturbances (called noise) the system evolves differently than planned. But this problem can be circumvented: as in classical computation, errors can be corrected in the quantum case by adding redundancy, and performing quantum error-correction algorithms. The extra qubits do not really participate in the quantum computation, but have the sole — yet essential — role of correcting the output. However, as discussed earlier, to reach fault-tolerance with such a scheme, massive amounts of redundant qubits are necessary. This is a huge challenge for quantum computing, and will take many years to be achieved.

In contrast to error correction, in noise mitigation the goal is not to perform error-free computations. Instead, the aim is to reduce or eliminate the effect of noise in the estimation of relevant quantities by using the results of several noisy repetitions of the computation together with post-processing. The mitigation, however, comes with a price: The number of additional repetitions grows with increasing processor sizes. Pushing down this noise mitigation overhead is of central importance in scaling up the near term quantum computations.

Road to useful quantum advantage with near-term quantum computers

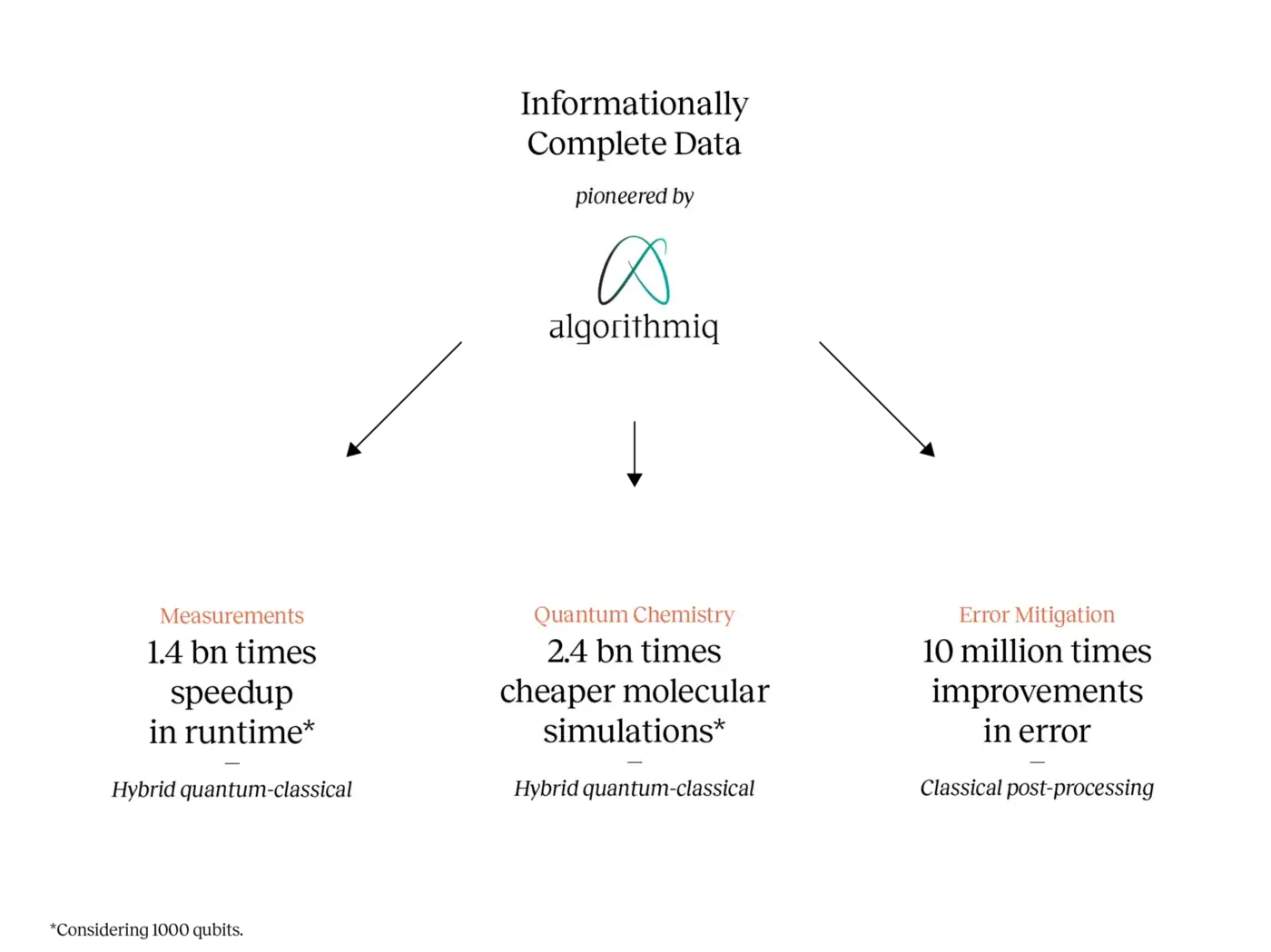

At Algorithmiq we have identified a way to tackle the three bottlenecks of near-term quantum computing, simultaneously. The key enabler in our approach is an interface between the quantum computer and the classical algorithms. This interface is our own major breakthrough. We call it informationally complete measurement data.

As discussed earlier, the number of measurements we need to make on the quantum hardware grows with the number of possible configurations. Current measurement strategies used in quantum computing allow to reconstruct specific properties of the system (for example, its energy), but don’t allow for the estimation of other properties. Informationally complete measurements, in contrast, allow us to estimate several (in fact all) properties at the same time, without additional measurement shots.

The possibility to extract all properties without extra overhead allows us to 1) find an optimal measurement scheme to extract information 2) optimise the number of gates needed to prepare a state on the quantum computer, 3) post-process the output from the quantum computer with best classical algorithms.

In the post-processing step, access to informationally complete data allows us to use state-of-the-art algorithms for tensor networks. Tensor networks offer a very powerful framework for optimising tensor contractions and present the cutting-edge in many computationally heavy fields. They are, for example, the workhorse behind the most powerful algorithms for deep learning⁵ and quantum chemistry⁶. Interestingly, also the simulation of the quantum advantage circuits we discussed earlier were achieved using tensor networks³.

With this carefully thought combination of methods we can show unparalleled performance. Using informationally complete measurements allows us to use VQE and many post-processing algorithms with greatly improved runtime scalings. For example, for determining ground and excited state properties of a molecule on a 1000-qubit quantum computer using VQE together with a post-processing method called quantum subspace expansion⁷, informationally complete measurements can deliver 1.4 billion-fold speed up in runtime. Faster runtimes naturally lead to cheaper simulations — 2.4 billion fold cheaper for the molecular simulation considered above. Further, informationally complete measurements provide an interface to apply proprietary error mitigation techniques powered by state-of-the-art tensor network libraries, that for specific examples have been shown to provide 10 million-fold improvement in error reduction.

Why now is the right time to demonstrate useful quantum advantage?

Quantum computers are becoming larger and larger and increasingly powerful. We now have within our reach for the first time in history all the ingredients to cross the line towards useful quantum computing. With our approach, utilising informationally complete data, we tackle all the three main existing roadblocks to the development of quantum computing at the same time, allowing near term quantum algorithms to be executed efficiently not only for few qubits but for more than a hundred.

Life-sciences is one of the fields that could be strongly impacted by advancements in quantum computing. We are collaborating with IBM to explore how to reduce the time and cost of drug discovery and development using near-term quantum computers.

But showing how our algorithms perform on paper is not enough. We at Algorithmiq want to make the concrete move to the era of useful quantum advantage. That’s why in our recently announced partnership with IBM, we are working together with their in-house experts to go beyond the current limits of practical computation in the context of drug discovery. Combining our runtime-minimising quantum algorithms with IBM’s best-in-class hardware and algorithms will allow us to overcome the limitations posed by current quantum hardware. This is a new era of useful quantum computing, an era of real world applications that hold the potential to revolutionise drug discovery.

¹ Google Quantum AI. “Exponential suppression of bit or phase errors with cyclic error correction.” Nature 595, 383–387 (2021).

² Arute, F., Arya, K., Babbush, R. et al. “Quantum supremacy using a programmable superconducting processor.” Nature 574, 505–510 (2019).

³ Pan, F., Keyang C., and Pan Z. “Solving the sampling problem of the sycamore quantum circuits.” Physical Review Letters 129 090502 (2022).

⁴ Grimsley, H.R., Economou, S.E., Barnes, E. et al. “An adaptive variational algorithm for exact molecular simulations on a quantum computer.” Nature Communications 10, 3007 (2019).

⁵ Novikov, A. et al. “Tensorizing neural networks.” Advances in neural information processing systems 28 (2015).

⁶ Schollwöck, U. “The density-matrix renormalization group in the age of matrix product states.” Annals of physics 326 96–192 (2011).

⁷McClean, J. R. et al. “Hybrid quantum-classical hierarchy for mitigation of decoherence and determination of excited states”, Phys. Rev. A 95, 042308 (2017).

Authors: Algorithmiq Team

©Algorithmiq 2021 | Design by Bitflow